Instructions for AutoTuner with Ray#

AutoTuner is a “no-human-in-loop” parameter tuning framework for commercial and academic RTL-to-GDS flows. AutoTuner provides a generic interface where users can define parameter configuration as JSON objects. This enables AutoTuner to easily support various tools and flows. AutoTuner also utilizes METRICS2.1 to capture PPA of individual search trials. With the abundant features of METRICS2.1, users can explore various reward functions that steer the flow autotuning to different PPA goals.

AutoTuner provides two main functionalities as follows.

Automatic hyperparameter tuning framework for OpenROAD-flow-script (ORFS)

Parametric sweeping experiments for ORFS

AutoTuner contains a top-level Python script for ORFS that implements several search algorithms. The currently supported search algorithms are as follows.

Random/Grid Search

Population Based Training (PBT)

Tree Parzen Estimator (HyperOpt)

Bayesian + Multi-Armed Bandit (AxSearch)

Tree Parzen Estimator + Covariance Matrix Adaptation Evolution Strategy (Optuna)

Evolutionary Algorithm (Nevergrad)

Three user-defined coefficients (coeff_perform, coeff_power, coeff_area), one per objective, set the direction of tuning. Each coefficient is expressed as a global variable in the get_ppa function of the PPAImprov class in the script. The effort spent optimizing each objective is proportional to its coefficient.
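As a rough illustration of how such coefficients can steer tuning, the sketch below folds three per-objective improvements into a single weighted score. The helper names (`percent_improvement`, `weighted_ppa_score`), the dictionary keys, the default coefficient values, and the normalisation are all assumptions made for this example; they are not taken from the AutoTuner script.

```python
def percent_improvement(reference: float, value: float) -> float:
    """Percent improvement of `value` over `reference`; lower values are better."""
    return (reference - value) / reference * 100.0


def weighted_ppa_score(reference, trial,
                       coeff_perform=0.5, coeff_power=0.25, coeff_area=0.25):
    """Combine three improvements into one score (hypothetical helper)."""
    perform = percent_improvement(reference["clk_period"], trial["clk_period"])
    power = percent_improvement(reference["power"], trial["power"])
    area = percent_improvement(reference["area"], trial["area"])
    # Normalise by the coefficient sum so only the ratios between them matter.
    total = coeff_perform + coeff_power + coeff_area
    return (coeff_perform * perform + coeff_power * power + coeff_area * area) / total


# Made-up reference and trial metrics, for illustration only.
reference = {"clk_period": 4.0, "power": 2.0, "area": 100.0}
trial = {"clk_period": 3.0, "power": 2.0, "area": 90.0}
print(weighted_ppa_score(reference, trial))
```

Raising one coefficient relative to the others makes improvements in that objective count for more in the score, which is one way to read "efforts proportional to the coefficients".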

Setting up AutoTuner#

To set up AutoTuner, make sure you have a virtual environment set up with Python 3.9.X. There are plenty of ways to do this; we recommend using Miniconda, which is a free minimal installer for the package manager conda.

# set up conda environment
conda create -n autotuner_env python=3.9
conda activate autotuner_env

# install requirements
pip install -r ./tools/AutoTuner/requirements.txt

Input JSON structure#

Sample JSON file for Sky130HD aes design:

Alternatively, here is a minimal example to get started:

{
    "_SDC_FILE_PATH": "constraint.sdc",
    "_SDC_CLK_PERIOD": {
        "type": "float",
        "minmax": [
            1.0,
            3.7439
        ],
        "step": 0
    },
    "CORE_MARGIN": {
        "type": "int",
        "minmax": [
            2,
            2
        ],
        "step": 0
    }
}

"_SDC_FILE_PATH", "_SDC_CLK_PERIOD", "CORE_MARGIN": Parameter names for sweeping/tuning.

"type": Parameter type (“float” or “int”) for sweeping/tuning.

"minmax": Min-to-max range for sweeping/tuning. The unit follows the default value of each technology std cell library.

"step": Parameter step within the minmax range. Step 0 for type “float” means continuous step for sweeping/tuning. Step 0 for type “int” means the parameter is constant.

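To make the field rules concrete, here is a minimal sketch that loads a configuration like the one above and reports how each entry would be interpreted. The function `describe_parameter` and its output strings are hypothetical, and taking the constant value from the lower bound is an assumption (the example uses equal bounds, so the choice does not matter there).

```python
import json

CONFIG_TEXT = """
{
    "_SDC_FILE_PATH": "constraint.sdc",
    "_SDC_CLK_PERIOD": {"type": "float", "minmax": [1.0, 3.7439], "step": 0},
    "CORE_MARGIN": {"type": "int", "minmax": [2, 2], "step": 0}
}
"""


def describe_parameter(name, spec):
    """Classify one entry of the search-space JSON (hypothetical helper)."""
    if not isinstance(spec, dict):
        # Plain values such as the SDC path are passed through unchanged.
        return f"{name}: fixed value {spec!r}"
    low, high = spec["minmax"]
    step = spec["step"]
    if step == 0 and spec["type"] == "float":
        return f"{name}: continuous float in [{low}, {high}]"
    if step == 0 and spec["type"] == "int":
        # Assumption: the constant is read from the lower bound.
        return f"{name}: constant int {low}"
    return f"{name}: {spec['type']} from {low} to {high} in steps of {step}"


for name, spec in json.loads(CONFIG_TEXT).items():
    print(describe_parameter(name, spec))
```

Parsing the file with `json.loads` also catches syntax problems such as a trailing comma before the closing brace, which strict JSON does not allow.
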
Tunable / sweepable parameters#

The parameters listed below can be swept or tuned in the technology platforms supported by ORFS. In addition, any variable that can be set from the command line can be used for tuning or sweeping.

For SDC you can use:

_SDC_FILE_PATH: Path relative to the current JSON file to the SDC file.

_SDC_CLK_PERIOD: Design clock period. This will create a copy of _SDC_FILE_PATH and modify the clock period.

_SDC_UNCERTAINTY: Clock uncertainty. This will create a copy of _SDC_FILE_PATH and modify the clock uncertainty.

_SDC_IO_DELAY: I/O delay. This will create a copy of _SDC_FILE_PATH and modify the I/O delay.
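The "create a copy and modify" behaviour described for the SDC parameters can be pictured with the sketch below. It assumes the SDC file sets the period on a line of the form `set clk_period <value>`; that convention, the function name `write_sdc_variant`, and the file names are assumptions for illustration and may not match how AutoTuner edits the file.

```python
import re
import tempfile
from pathlib import Path


def write_sdc_variant(sdc_path: Path, out_path: Path, clk_period: float) -> Path:
    """Write a copy of `sdc_path` with a new clock period (hypothetical helper)."""
    text = sdc_path.read_text()
    # Assumption: the period is defined as "set clk_period <value>".
    new_text, count = re.subn(
        r"^([ \t]*set[ \t]+clk_period[ \t]+)\S+",
        lambda match: f"{match.group(1)}{clk_period}",
        text,
        flags=re.MULTILINE,
    )
    if count == 0:
        raise ValueError(f"no 'set clk_period' line found in {sdc_path}")
    out_path.write_text(new_text)  # the original file is left untouched
    return out_path


with tempfile.TemporaryDirectory() as tmp:
    original = Path(tmp) / "constraint.sdc"
    original.write_text("set clk_name core_clock\nset clk_period 5.0\n")
    variant = write_sdc_variant(original, Path(tmp) / "constraint_variant.sdc", 2.5)
    print(variant.read_text())
```

Writing to a separate file keeps the base constraints intact, so each trial can use its own clock period without affecting the others.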

For Global Routing parameters that are set on fastroute.tcl you can use:

_FR_FILE_PATH: Path relative to the current JSON file to the fastroute.tcl file.

_FR_LAYER_ADJUST: Layer adjustment. This will create a copy of _FR_FILE_PATH and modify the layer adjustment for all routable layers, i.e., from $MIN_ROUTING_LAYER to $MAX_ROUTING_LAYER.

_FR_LAYER_ADJUST_NAME: Layer adjustment for layer NAME. This will create a copy of _FR_FILE_PATH and modify the layer adjustment only for the layer NAME.

_FR_GR_SEED: Global route random seed. This will create a copy of _FR_FILE_PATH and modify the global route random seed.

How to use#

General Information#

The distributed.py script uses Ray’s job scheduling and management to fully utilize available hardware resources from a single server configuration, on-premises or over the cloud with multiple CPUs.

There are two modes of operation: sweep, where every possible parameter combination in the search space is tested; and tune, where we use Ray’s Tune feature to intelligently search the space and optimize hyperparameters using one of the algorithms listed above. The sweep mode is useful when we want to isolate or test a single or very few parameters. On the other hand, tune is more suitable for finding the best combination of a large and complex set of flow parameters. Both modes rely on a user-specified search space that is defined by a .json file. They use the same syntax and format, though some features may not be available for sweeping.
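The idea of testing every possible combination can be sketched with a Cartesian product over the values of each parameter. The helper names `expand` and `sweep_points`, the example ranges, and the choice to treat the upper bound as inclusive are assumptions for illustration; only integer parameters are handled here.

```python
import itertools


def expand(spec):
    """List the values of one integer parameter (hypothetical helper)."""
    low, high = spec["minmax"]
    step = spec["step"]
    if step == 0:
        return [low]  # step 0 on an int means a constant parameter
    # Assumption: the upper bound is included in the sweep.
    return list(range(low, high + 1, step))


def sweep_points(space):
    """Yield one dict per combination of parameter values."""
    names = list(space)
    for combo in itertools.product(*(expand(space[name]) for name in names)):
        yield dict(zip(names, combo))


# Made-up ranges, for illustration only.
space = {
    "CORE_MARGIN": {"type": "int", "minmax": [2, 4], "step": 1},
    "_FR_GR_SEED": {"type": "int", "minmax": [1, 2], "step": 1},
}
for point in sweep_points(space):
    print(point)
```

The number of combinations is the product of the value counts per parameter, which is why sweeping suits a handful of parameters while tuning suits larger search spaces.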

Note

The order of the parameters matters. Arguments --design, --platform and --config are always required and should precede the mode (sweep or tune).
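One common reason for this kind of ordering rule is a command-line parser built from top-level options plus subcommands. The sketch below uses Python's standard argparse module to show the effect; whether distributed.py is structured this way is an assumption, and `build_parser` is a hypothetical name.

```python
import argparse


def build_parser():
    """Top-level options plus one subcommand per mode (illustrative only)."""
    parser = argparse.ArgumentParser(prog="distributed.py")
    parser.add_argument("--design", required=True)
    parser.add_argument("--platform", required=True)
    parser.add_argument("--config", required=True)
    modes = parser.add_subparsers(dest="mode", required=True)
    modes.add_parser("tune")
    modes.add_parser("sweep")
    return parser


# Options first, mode last: this order parses successfully.
args = build_parser().parse_args(
    ["--design", "gcd", "--platform", "sky130hd",
     "--config", "autotuner.json", "tune"]
)
print(args.mode, args.design, args.platform, args.config)
```

With this layout, everything after the mode name is handed to the subcommand, so top-level options placed after `tune` or `sweep` are not seen by the main parser and the call fails.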

Tune only#

AutoTuner:

python3 distributed.py tune -h

Example:

python3 distributed.py --design gcd --platform sky130hd \
    --config ../designs/sky130hd/gcd/autotuner.json \
    tune

Sweep only#

Parameter sweeping:

python3 distributed.py sweep -h

Example:

python3 distributed.py --design gcd --platform sky130hd \
    --config distributed-sweep-example.json \
    sweep

List of input arguments#

| Argument | Description |
|---|---|
| --design | Name of the design for Autotuning. |
| --platform | Name of the platform for Autotuning. |
| --config | Configuration file that sets which knobs to use for Autotuning. |
| | Experiment name. This parameter is used to prefix the FLOW_VARIANT and to set the Ray log destination. |
| | Resume previous run. |
| | Clean binaries and build files. WARNING: may lose previous data. |
| | Force new git clone. WARNING: may lose previous data. |
| | Additional git clone arguments. |
| | Use latest version of OpenROAD app. |
| | OpenROAD app branch to use. |
| | OpenROAD-flow-scripts branch to use. |
| | OpenROAD-flow-scripts repo URL to use. |
| | Additional arguments given to ./build_openroad.sh |
| | Search algorithm to use for Autotuning. |
| | Evaluate function to use with search algorithm. |
| | Number of samples for tuning. |
| | Number of iterations for tuning. |
| | Number of CPUs to request for each tuning job. |
| | Reference file for use with PPAImprov. |
| | Perturbation interval for PopulationBasedTraining. |
| | Random seed. |
| | Max number of concurrent jobs. |
| | Max number of threads usable. |
| | The address of Ray server to connect. |
| | The port of Ray server to connect. |
| | Verbosity level. [0: only Ray status, 1: print stderr, 2: also print training stdout] |

GUI#

Progress is displayed in the terminal where you run AutoTuner, and the results are displayed once all runs are finished. Look for “Best config found” on the screen.

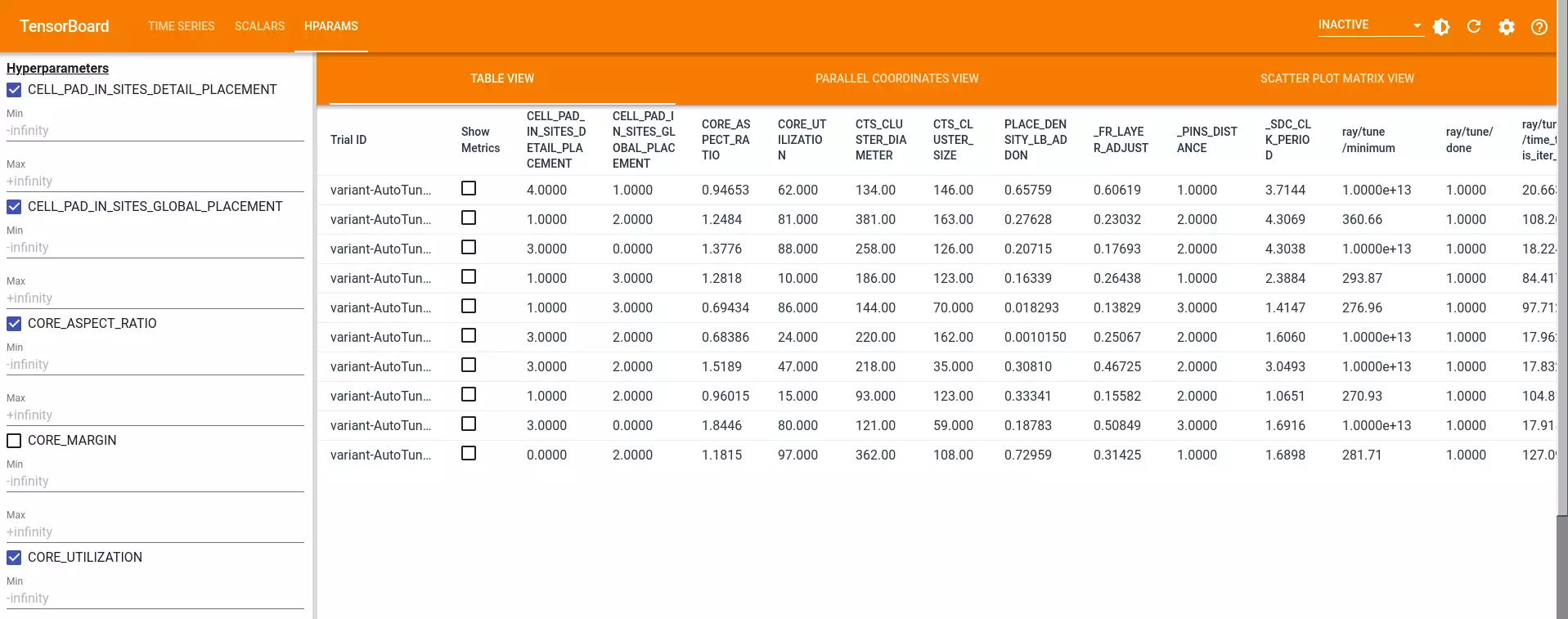

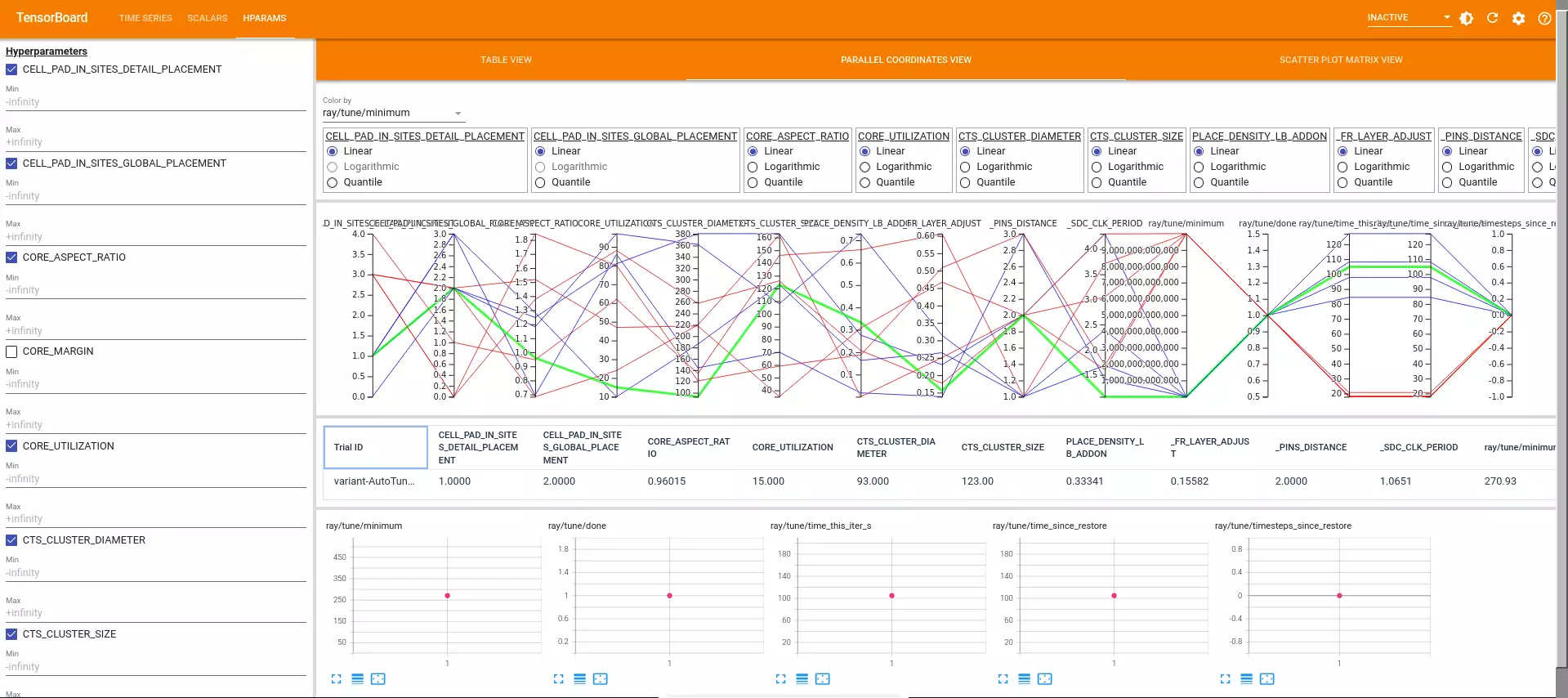

To use the TensorBoard GUI, run tensorboard --logdir=./<logpath>. While TensorBoard is running, you can open the webpage http://localhost:6006/ to see the GUI.

Three different views are available at the end, namely: Table View, Scatter Plot Matrix View and Parallel Coordinate View.

Table View

Scatter Plot Matrix View

Parallel Coordinate View (best run is in green)

Citation#

Please cite the following paper.

Acknowledgments#

AutoTuner has been developed by UCSD with the OpenROAD Project.